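0. Task requirements

Data sources: camera, smoke sensor, temperature and humidity sensor (DHT11), flame sensor, light sensor

Actuators: fan, sprinkler, LED light

PC side subscribes to: video_stream, shu_data. PC side publishes to: pc_data

Raspberry Pi: publishes the video stream to video_stream, publishes data to shu_data, and receives (subscribes to) data on pc_data

Temperature: tem. Temperature threshold: th_tem

Humidity: hum. Humidity threshold: th_hum

Air quality: mq2. Air quality threshold: th_mq2

Light level: light. Light threshold: th_light

Flame: fire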
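The value/threshold pairs above suggest a simple compare-against-threshold rule. The following is a minimal sketch of such a rule. The mapping from sensors to actuators, the direction of each comparison, and the function name `decide_actuators` are all assumptions made for illustration; the notes only define the field names.

```python
def decide_actuators(readings, thresholds):
    """Return which actuators should be switched on for one set of readings.

    Assumed mapping (hypothetical, not specified in the notes):
      fan       -> tem, hum or mq2 above its threshold
      sprinkler -> flame detected (fire is truthy)
      led       -> light below th_light
    """
    fan = (
        readings["tem"] > thresholds["th_tem"]
        or readings["hum"] > thresholds["th_hum"]
        or readings["mq2"] > thresholds["th_mq2"]
    )
    sprinkler = bool(readings["fire"])
    led = readings["light"] < thresholds["th_light"]
    return {"fan": fan, "sprinkler": sprinkler, "led": led}
```

Keeping the decision in a pure function like this means it can be exercised on the PC without any hardware attached.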

0. Basic Raspberry Pi operations

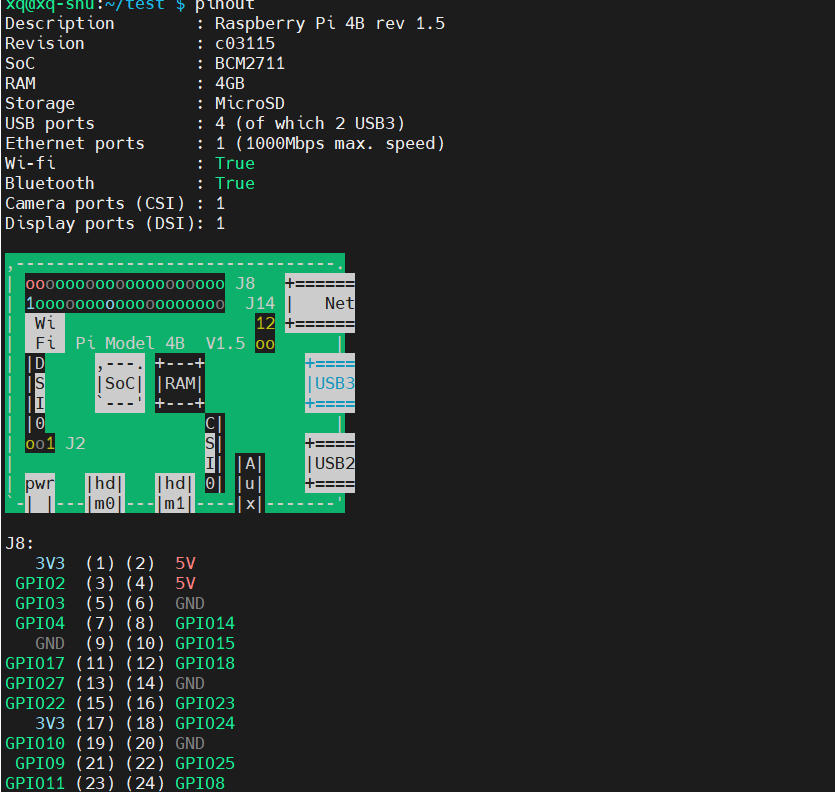

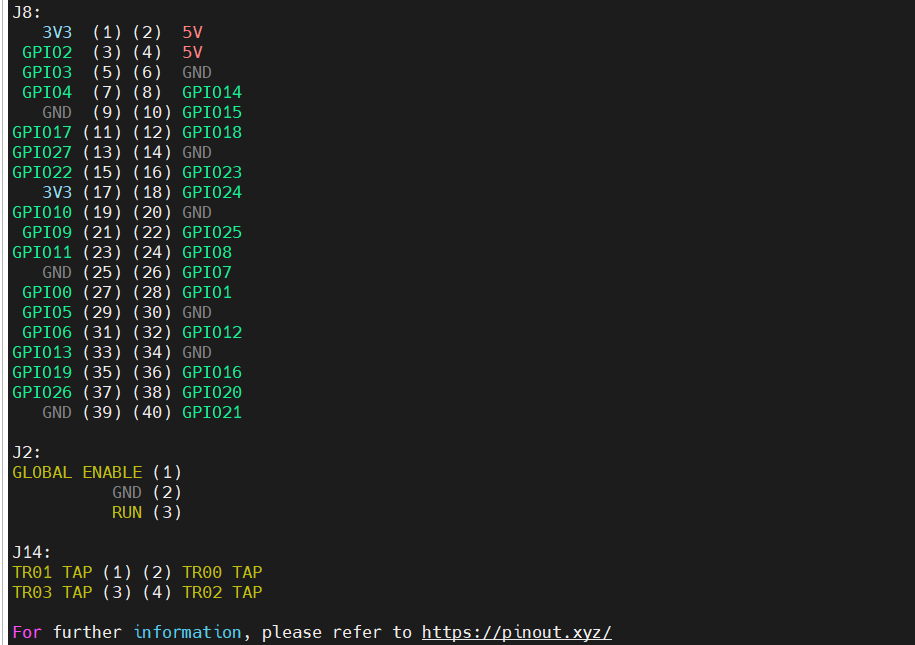

1. How to view the Raspberry Pi's pin layout

    pinout

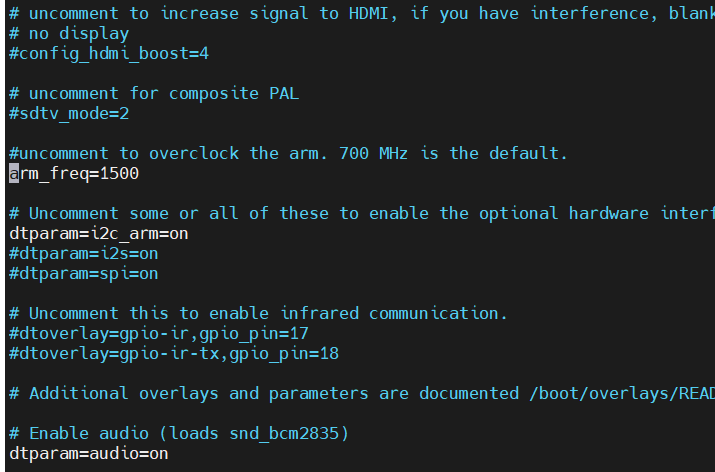

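2. Pinning the CPU frequency

On the Raspberry Pi 4B, the maximum frequency is 1.5 GHz both with all cores active and with a single core active,

so the CPU frequency can be fixed at 1.5 GHz.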
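To see what the kernel itself reports, the cpufreq values can be read from sysfs. This is a small sketch, assuming the standard Linux cpufreq directory for `cpu0` is present; the helper name `read_freq_mhz` is made up for this example.

```python
from pathlib import Path

# Standard Linux cpufreq location for the first core (assumed to exist on the Pi)
CPUFREQ_DIR = Path("/sys/devices/system/cpu/cpu0/cpufreq")


def read_freq_mhz(name, base=CPUFREQ_DIR):
    """Read one cpufreq sysfs value (stored in kHz) and return it in MHz."""
    return int((Path(base) / name).read_text().strip()) / 1000
```

On the Pi, `read_freq_mhz("cpuinfo_max_freq")` gives the hardware limit and `read_freq_mhz("scaling_cur_freq")` the current frequency, so the two can be compared before and after pinning.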

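Method 1

Use the cpufrequtils tool

    sudo apt install cpufrequtils  # install the tool

    alias get_cpu='sudo cpufreq-info -w -m'  # alias defined in .bashrc for viewing the CPU frequency

    alias set_cpu='sudo cpufreq-set -f 1.5GHz'  # alias defined in .bashrc for setting (pinning) the CPU frequency

    alias reset_cpu='sudo cpufreq-set -g ondemand'  # alias defined in .bashrc for restoring the default frequency governor (ondemand)

Method 2 (recommended)

Edit the /boot/config.txt file

    sudo nano /boot/config.txt

    # Uncomment this line and set it to:
    arm_freq=1500

Check whether it worked

    get_cpu

3. Setting up a script that configures the environment when the Raspberry Pi boots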

    sudo nano /etc/rc.local

    # In rc.local, add the location of the startup script (/home/xq/startup.sh) before the "exit 0" line
    /bin/bash /home/xq/startup.sh &

1. Building MQTT for Qt

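2. Reading the DHT11 on the Raspberry Pi

1. Using Adafruit_DHT

Install the DHT11 driver library

    pip install Adafruit_DHT

Then read the sensor in a loop

    import Adafruit_DHT
    import time

    sensor = Adafruit_DHT.DHT11
    pin = 4  # GPIO pin number

    while True:
        # read_retry retries internally and returns (None, None) if every attempt fails
        humidity, temperature = Adafruit_DHT.read_retry(sensor, pin)
        if humidity is not None and temperature is not None:
            print('Temperature: {0:0.1f}°C  Humidity: {1:0.1f}%'.format(temperature, humidity))
        else:
            print('Read failed; check that the sensor is wired correctly.')
        time.sleep(2)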
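Because `read_retry` can return `None` values, it may be worth building the message for the shu_data topic only from valid readings. Here is a minimal sketch, assuming the payload is JSON keyed by the `tem` and `hum` names from the task requirements. The JSON wire format and the helper name `build_payload` are assumptions; the notes do not specify how shu_data is encoded.

```python
import json


def build_payload(humidity, temperature):
    """Return a JSON string with tem/hum, or None if the sensor read failed."""
    if humidity is None or temperature is None:
        return None  # nothing to publish for a failed read
    return json.dumps({"tem": round(temperature, 1), "hum": round(humidity, 1)})
```

A non-`None` result could then be handed to a paho-mqtt client with `client.publish("shu_data", payload)`.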

2. To be added later; when I tried this myself, I could never read any data

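3. Face recognition on the Raspberry Pi with a USB camera

How to use a camera on the Raspberry Pi is already covered in my blog post 树莓派使用摄像头网络实时监控 | 哈哈 (hahaxiong0204.github.io).

OpenCV and NumPy must also be installed on the Raspberry Pi

    sudo apt-get install python3-opencv -y

    sudo apt-get install python3-numpy -y

The code below sends the frames to the host PC over MQTT. They can also be displayed on the Raspberry Pi by uncommenting the corresponding line: cv2.imshow('image', img)

The haarcascade_frontalface_alt2.xml file must be present in the script's directory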

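    # face_my.py
    import cv2
    import os
    import numpy as np
    import pickle
    import paho.mqtt.client as mqtt
    import base64
    import time

    # MQTT connection parameters
    mqtt_broker = "192.168.137.1"  # replace with the PC's IP address
    mqtt_port = 1883
    mqtt_topic = "video_stream"

    # Create the MQTT client (paho-mqtt 1.x style; paho-mqtt 2.x expects
    # mqtt.Client(mqtt.CallbackAPIVersion.VERSION1, "RaspberryPi") instead)
    client = mqtt.Client("RaspberryPi")
    client.connect(mqtt_broker, mqtt_port, 60)
    client.loop_start()  # handle network traffic in a background thread

    cap = cv2.VideoCapture(0)
    cap.set(3, 640)  # set video width
    cap.set(4, 480)  # set video height

    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_alt2.xml')

    current_id = 0   # next numeric label to assign
    label_ids = {}   # person name -> numeric label
    x_train = []     # face crops used for training
    y_labels = []    # numeric label for each crop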

    def face_recognition():
        num_count = 0
        label_id_name = {}
        with open("label.pickle", 'rb') as f:
            origin_labels = pickle.load(f).items()
            # print("source file data:", origin_labels)
            # Invert name -> id into id -> name for lookup after predict()
            label_id_name = {v: h for h, v in origin_labels}
            print(label_id_name)

        recognizer = cv2.face.LBPHFaceRecognizer_create()
        recognizer.read('my_trainer.xml')

        while True:
            ret, img = cap.read()
            if not ret:  # camera read failed
                break
            img = cv2.flip(img, 1)  # mirror the image horizontally
            gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            faces = face_cascade.detectMultiScale(gray, 1.3, 5)
            num_count += 1
            for (x, y, w, h) in faces:
                if num_count >= 5:  # skip the first few frames
                    id_, conf = recognizer.predict(gray[y:y + h, x:x + w])
                    print("id = ", label_id_name[id_], "conf = ", conf)
                    # Only accept the match when conf falls inside this range
                    if 20 <= conf <= 54:
                        cv2.putText(img, str(label_id_name[id_]), (x + 5, y - 5),
                                    cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 255), 2)
                    else:
                        cv2.putText(img, "NULL", (x + 5, y - 5),
                                    cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 255), 2)
                cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)

            # cv2.imshow('image', img)

            # ====================== send the frame over MQTT ======================
            encode_param = [int(cv2.IMWRITE_JPEG_QUALITY), 90]
            result, encoded_frame = cv2.imencode('.jpg', img, encode_param)
            if result:
                # Convert the JPEG-encoded frame to base64
                frame_base64 = base64.b64encode(encoded_frame.tobytes())
                client.publish(mqtt_topic, frame_base64)
            # time.sleep(1 / 5)
            # ======================================================================

            # Press 'ESC' to exit (key presses are only seen while an OpenCV window is open)
            k = cv2.waitKey(100) & 0xff
            if k == 27:
                break

    def input_face(name):
        count = 0
        all_num = 60  # number of face samples to capture

        # Create the dataset folder for this person if it does not exist yet
        folder = './dataset/' + name
        if not os.path.exists(folder):
            os.makedirs(folder)  # makedirs also creates missing parent directories
            print("Folder created")

        while True:
            ret, img = cap.read()
            if not ret:  # camera read failed
                break
            img = cv2.flip(img, 1)  # mirror the image horizontally
            gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            faces = face_cascade.detectMultiScale(gray, 1.3, 5)

            for (x, y, w, h) in faces:
                if count % 20 == 0:
                    # Note: the script only prints this prompt; it does not wait for a key
                    print("Press any key to continue")
                if count == 0:
                    print("Face the camera straight on and wait for capture to start")
                cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)
                count += 1
                path_jpg = folder + '/' + str(count) + ".jpg"
                cv2.imwrite(path_jpg, gray[y:y + h, x:x + w])  # save the grayscale face crop
                # cv2.imwrite(path_jpg, img)
                print(path_jpg)

            # cv2.imshow('image', img)

            # ====================== send the frame over MQTT ======================
            encode_param = [int(cv2.IMWRITE_JPEG_QUALITY), 90]
            result, encoded_frame = cv2.imencode('.jpg', img, encode_param)
            if result:
                # Convert the JPEG-encoded frame to base64
                frame_base64 = base64.b64encode(encoded_frame.tobytes())
                client.publish(mqtt_topic, frame_base64)
            # time.sleep(1 / 5)
            # ======================================================================

            # Press 'ESC' to exit (key presses are only seen while an OpenCV window is open)
            k = cv2.waitKey(100) & 0xff
            if k == 27:
                break
            elif count >= all_num:  # stop once all_num (60) face samples are saved
                break

    # Train the face recognizer
    def train_face():
        global current_id
        recognizer = cv2.face.LBPHFaceRecognizer_create()

        for root, dirs, files in os.walk('dataset'):
            # print(root, dirs, files)
            for file in files:
                path = os.path.join(root, file)
                image = cv2.imread(path)
                if image is None:  # skip files that are not readable images
                    continue
                gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
                image_array = np.array(gray, "uint8")

                # The folder name is the person's name; map it to a numeric id
                label = os.path.basename(root)
                if label not in label_ids:
                    label_ids[label] = current_id
                    current_id += 1
                id_ = label_ids[label]

                faces = face_cascade.detectMultiScale(image_array, scaleFactor=1.5, minNeighbors=5)
                for (x, y, w, h) in faces:
                    roi = image_array[y:y + h, x:x + w]
                    x_train.append(roi)
                    y_labels.append(id_)

        # Save the name -> id mapping so face_recognition() can invert it later
        with open("label.pickle", "wb") as f:
            pickle.dump(label_ids, f)
            print(label_ids)

        recognizer.train(x_train, np.array(y_labels))
        recognizer.save("my_trainer.xml")

    # while True:
    name = input("please input name :")
    if len(name) != 0:
        # A name was entered: capture samples for it and retrain
        input_face(name)
        train_face()

    face_recognition()

    cap.release()
    cv2.destroyAllWindows()
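On the PC side, each message on video_stream has to be turned back from base64 into JPEG bytes before it can be displayed. The following is a rough sketch of that receiving step, not the project's actual PC program. The names `decode_frame` and `run_subscriber`, the `latest.jpg` output file, and the broker address `127.0.0.1` (assuming the broker runs on the PC itself) are all assumptions for illustration.

```python
import base64

JPEG_SOI = b"\xff\xd8"  # every JPEG stream starts with these two bytes


def decode_frame(payload):
    """Decode one base64 MQTT payload back into raw JPEG bytes."""
    jpeg = base64.b64decode(payload)
    if not jpeg.startswith(JPEG_SOI):
        raise ValueError("payload is not a JPEG image")
    return jpeg


def run_subscriber(broker="127.0.0.1", port=1883, topic="video_stream"):
    """Subscribe to the video topic and keep overwriting latest.jpg (hypothetical)."""
    # Imported lazily so decode_frame can be used without paho-mqtt installed
    import paho.mqtt.client as mqtt

    def on_message(client, userdata, msg):
        with open("latest.jpg", "wb") as f:
            f.write(decode_frame(msg.payload))

    try:
        # paho-mqtt 2.x requires a callback API version
        client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    except AttributeError:
        # paho-mqtt 1.x has no CallbackAPIVersion
        client = mqtt.Client()
    client.on_message = on_message
    client.connect(broker, port, 60)
    client.subscribe(topic)
    client.loop_forever()
```

Checking the JPEG start marker is a cheap way to reject stray messages on the topic before handing the bytes to an image decoder.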